Introduction

As interactions have become more complex, we’ve moved away from physical interfaces (control panels with knobs and buttons) to software interfaces. And that’s a great thing: software UIs are not constrained by physical space and can adapt to be whatever is needed.

However, we also lost something. Physical interfaces are approachable, tactile, and have immediate physical feedback.

With Augmented Reality, where the digital is layered onto the real world, we can leverage both the physicality of objects and the versatility of software.

Case Study

Most current AR designs are simply taking 2D touch interfaces and projecting them onto 3D environments. Our case study explores how we can forgo the traditional 2D UI model for something more tangible.

Each song in the music queue is treated as a physical object people can directly interact with: lift, move, drop, arrange as they wish.

These song objects are small enough to fit in the virtual space, yet large enough to be easily manipulated.

Some ideas we explored were “boxes” or “buckets” to facilitate exploration. In a 2D UI, we have an endlessly scrolling queue. For AR, why can’t we have a bucket that never empties, where people can continually pick up new songs or albums to explore?
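To make the never-emptying bucket a little more concrete, here is a minimal sketch of how its behavior might be modeled. This is not the implementation behind our prototype: the `EndlessBucket` class, its `visible` parameter, and the placeholder song titles are all assumptions made up for illustration.

```python
from collections import deque
from itertools import cycle
from typing import Deque, Iterator, List


class EndlessBucket:
    """Hypothetical model of a bucket that refills whenever a song is lifted out."""

    def __init__(self, source: Iterator[str], visible: int = 5) -> None:
        self._source = source    # assumed to be an endless stream of song titles
        self._visible = visible  # how many song objects sit in the bucket at once
        self._songs: Deque[str] = deque()
        self._refill()

    def _refill(self) -> None:
        # Top the bucket back up to its visible capacity.
        while len(self._songs) < self._visible:
            self._songs.append(next(self._source))

    def pick_up(self) -> str:
        # Lifting a song out immediately makes room for a new one.
        song = self._songs.popleft()
        self._refill()
        return song

    def contents(self) -> List[str]:
        # Snapshot of the songs currently sitting in the bucket.
        return list(self._songs)


# Placeholder catalog that repeats forever; a real source would be a recommendation feed.
catalog = cycle(["Song A", "Song B", "Song C"])
bucket = EndlessBucket(catalog, visible=2)
picked = [bucket.pick_up() for _ in range(4)]
```

The point of the sketch is that the bucket always holds the same number of objects no matter how many are picked up, which is the physical analogue of a queue that scrolls forever.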

Process

Prototyping for AR is incredibly hard. Most prototyping tools are meant for 2D screens, and the tools meant for AR, such as Unity deployed to HoloLens, are buggy and time-consuming.

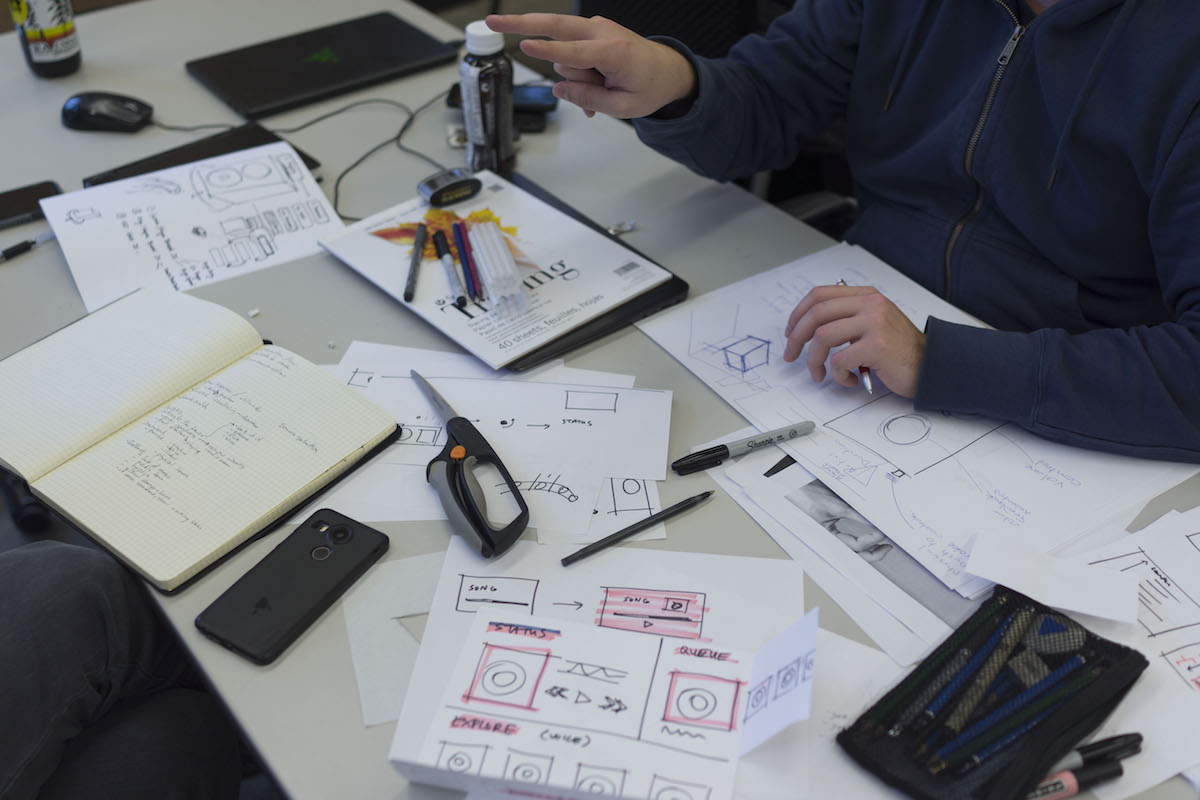

In the early stages, we used paper prototypes that we could move around.

Later, we used the Surface’s model viewer and HoloLens to get a more representative understanding of our designs.

Lessons Learned

Going into this case study, we thought it would be easy. The status quo is horrible: people are simply porting their traditional 2D UIs into AR. We were just going to come in, make everything 3D, and bring back all these physical affordances.

That didn’t work. We quickly realized that many interactions, especially those relating to music, do not have a physical counterpart. For example, there is no physical version of a music queue that you can easily manipulate.

Here is a screenshot of Spotify’s UI:

It turned out that many of the interactions we use today, while initially rooted in physicality, are now native to the digital realm.

That is the biggest lesson we learned. Yes, there is an opportunity to add physical affordances to AR interfaces, but the answers are not as obvious as we assumed. It will take much more exploration to find a consistently great interaction paradigm for this medium.